About the Cloaking Workshop

The Cloaking Workshop was a hands-on session designed for artists, designers, and creative practitioners interested in protecting their work from exploitation by generative AI systems.

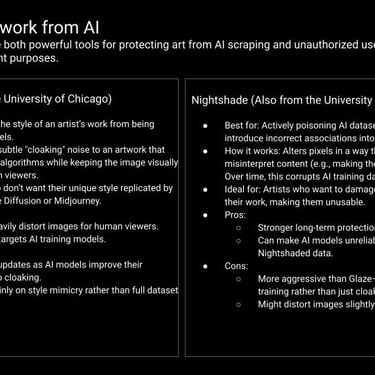

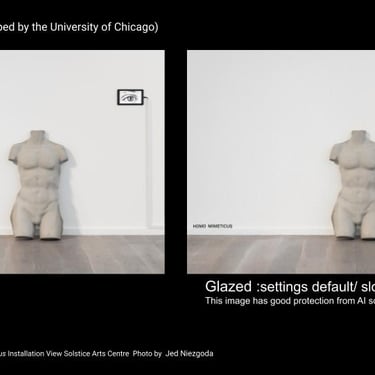

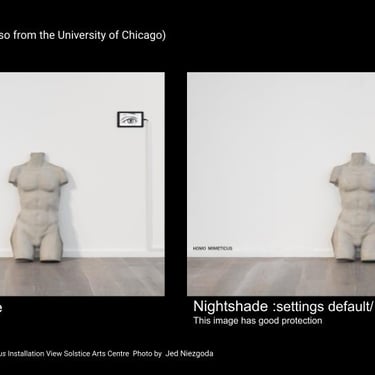

As AI models are increasingly trained on images scraped from the internet without consent, the workshop introduced the cloaking tools Glaze and Nightshade, developed by Professor Ben Zhao at the University of Chicago (see his talk here), which help shield artworks from being mimicked by these systems.

The workshop was co-facilitated by second-year Fine Art Media students from NCAD, who supported both the preparation and delivery as part of their engagement with critical digital practice.

What we covered:

How to work directly with Glaze and Nightshade to apply cloaking techniques.

Cloaking as both a practical defence and a conceptual form of resistance.

Conversations about authorship, ethical considerations, and the technical foundations of cloaking tools.